It was said somewhere in Lord of the Rings that Wizards 'are subtle and quick to anger,’ and substituting ‘SQL Server’ in this phrase proves to be also true.

Over the last couple of years I have had a number of issues with SQL Server which specifically involve self-referencing updates.

A self-referencing update uses the destination table in the query associated with the update.

These problems occasionally cause catastrophic, unpredictable degradation in query performance which has caused CM syncs to suddenly degrade and in some cases never complete.

It is becoming evident that there is definitely a quirk in SQL Server and this problem appears to occur in all versions I’ve tested so far. The problem is fiendish in that it is sudden, catastrophic and is both sensitive to the data AND the environment in which you perform the query. This means you may see it on one machine, move the exact same database elsewhere and the problem vanishes.

In the most recent example, the fairly simple SQL below exhibits the problem. Note that although this query uses a temp table, the problem has been seen even when the queries involve only permanent tables.

UPDATE ApplicationInstMachine SET aim_installdate_utc = t.installdate

FROM ApplicationInstMachine s

JOIN dbo.#tempApplications t

ON t.uapp_guid = s.aim_uapp_guid AND

t.uslm_smsguid = s.aim_machine_guid AND

(t.installdate IS NOT NULL AND s.aim_installdate_utc IS NULL )

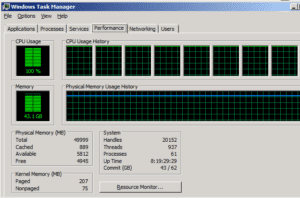

This is one of those issues where a picture is worth a thousand words. Here’s what happened when this query was run. 24 hours later we were still wedged in this state!

In the example above the cardinality of the two tables is approximately 13 million rows and no rows will match the join clause (i.e the data was already updated on a previous sync run and is therefore not updated this time).

We can see that by simply modifying the query to say

SELECT COUNT(*)

FROM ApplicationInstMachine s

JOIN dbo.#tempApplications t

ON t.uapp_guid = s.aim_uapp_guid AND

t.uslm_smsguid = s.aim_machine_guid AND

(t.installdate IS NOT NULL AND s.aim_installdate_utc IS NULL )

This returns 0 rows in approximately 9 seconds on the test environment.

The problem is sensitive both to the data and the environment in which it is run. I could take this same database and bring it up on a different guest, possibly even with the exact same core count and memory, and see completely different behaviour.

Normally this query should complete in just a few seconds or maybe a minute or two at most.

If we refactor the query to create a fresh temp table with the candidate rows, like this, the problem goes away

1. Do the previous update query but just get the candidate rows into a fresh temp table (it will be empty in this case, because there are no candidate rows that match the criteria).

SELECT t.uapp_guid,t.uslm_smsguid,t.installdate

INTO dbo.#tempNewInstallDates FROM ApplicationInstMachine s

JOIN dbo.#tempApplications t

ON t.uapp_guid = s.aim_uapp_guid AND

t.uslm_smsguid = s.aim_machine_guid AND

(t.installdate IS NOT NULL AND s.aim_installdate_utc IS NULL )

2. Now update by joining back to the temp table but without the more complex join clause, because the temp table now has only the applicable data. This query completes in just a few seconds.

UPDATE ApplicationInstMachine SET aim_installdate_utc = t.installdate

FROM ApplicationInstMachine s

JOIN dbo.#tempNewInstallDates t

ON t.uapp_guid = s.aim_uapp_guid AND

t.uslm_smsguid = s.aim_machine_guid

In some cases, the problem can be mitigated by ensuring that SQL Server statistics are updated (so that it has an accurate picture of table cardinality). In theory, these statistics are updated automatically, however, in some cases SQL Server seems not to ever get round to doing it. Statistics can be safely (and quickly) updated at any time by running

Sp_updatestats

Doing this on the target database which exhibited the problem caused the issue to vanish, though it is likely to re-emerge if statistics get out of date again. The refactored query is probably not vulnerable to this issue.

Obviously temp tables may have incorrect estimated cardinality at the time they are used – but SQL Server will in this case assume the table has no rows, as far as I know. It’s not clear how this would cause the problem above, though.

I believe that SQL Server chooses an entirely inappropriate query plan which ( I suspect) in some way involves calculating a huge Cartesian product in memory, if:-

(a) The query is a self-referencing update query involving a JOIN to another table or tables

(b) The JOIN clause is non-trivial (though the above example is pretty trivial, so what constitutes ‘trivial’ is still a little unclear)

(c) Statistics are out of date

Under these circumstances, the query may simply consume enormous amounts of CPU and possibly never complete. Of course, ironically, it is therefore impossible to find out what the actual query plan used was!

Normally I recommend that any scheduled maintenance plan for SQL Server should run sp_updatestats, since it will mitigate this issue and is a generally benign and safe thing to do. However, obviously, as we find queries sensitive to this problem, they are progressively refactored to be resilient to the scenario. Hopefully this will turn out to be the last one!

TeamViewer DEX Helps with

Ensures a smooth and uninterrupted digital experience for employees, minimizing frustrations and unnecessary disruptions.

Optimize processes with enhanced visibility and automation, driving proactive remediations, exceptional service, and reduced costs.

Resolve IT issues proactively and in real-time to reduce service desk incidents, minimize downtime, and maintain smooth, efficient operations.

Identify, notify, and fix compliance drift, digital friction, and end-user frustration issues.

TeamViewer DEX for

Lightweight, always-on communication, real-time actions, and automated client health remediation,

Optimize hardware spend while facilitating proactive hardware asset management

Gain clear insights into software usage and reclaim underused, redundant, or vulnerable software.

Core Capabilities

TeamViewer DEX Platform

Helps IT teams improve end user experience, tighten security, reduce costs, and evolve IT Operations from cost center to strategic enabler.

Dig into original DEX analysis, case studies, on-demand webinars, and more.

Be a DEX leader with useful strategies, how-to guides, and fresh platform updates.

Select your specific use cases to see demo videos narrated by product experts.

Understanding key terms and concepts related to Digital Employee Experience.

What is Digital Employee Experience (DEX)?

From the software and hardware used each day to IT interactions, DEX is the total of all digital touchpoints an employee encounters at work.

Customer Success

How Britain’s Favorite Retailer has transformed endpoint management across more than 1,000 stores with 1E

About 1E

At 1E, we reimagine how technology serves people and create new ways for IT to shape the future of work.